4 Best Practices for Valid Email Surveys

There are multiple reasons why you should be using email surveys as part of your marketing program. However, they all boil down to increased customer satisfaction and increased marketing intelligence. When properly implemented, email surveys give your customers a chance to tell you directly how they feel about your products and services. With the right combination of subject lines, relevancy, design, and content, surveys have the potential for high open and response rates.

Before we get into the steps for designing and implementing surveys as part of your marketing campaigns, let's establish some basic principles to maximize survey effectiveness.

- Precise, Painless, and Quick: Surveys need to be precise. Most customers actually enjoy having the ability to provide honest feedback about brands they interact with. But they don't enjoy spending too much time taking surveys, nor do they enjoy answering a lot of irrelevant questions.

- Timely: Surveys need to be timed correctly to maximize valid intelligence. When you're planning your next email marketing campaign, think about the best practical time to include a survey. Generally, you want to ask subscribers to take your survey at the end of the campaign. For example, if you are launching a new product and have a 12-stage drip campaign ready to go, a survey at the beginning wouldn't make sense, since sales are usually stronger at the end of a campaign than at the beginning.

- Not too Frequent: The opposite of not conducting enough surveys is asking customers to take too many of them. Not only does this make customers frustrated, it makes for a bunch of data that may be useless. Again, it's worth noting that surveys as part of your email marketing campaign need to be well planned.

- Direct, Clear Language: The chief enemies of a high survey response rate are vague, irrelevant, misunderstood, and seemingly unanswerable questions. If the survey taker needs to guess what you are asking, you'll get a lower response rate, a higher incomplete response rate, or even worse, bad data.

- Easy to Analyze: Having survey data at your disposal is irrelevant if you can't take the time to analyze, and contextualize, the results. When designing the survey, it's important that you can analyze the results in a way that makes sense to you. Simpler surveys benefit the analyst (that's you) and the taker.

With those principles laid out, we can dive right into the best practices for email marketing surveys.

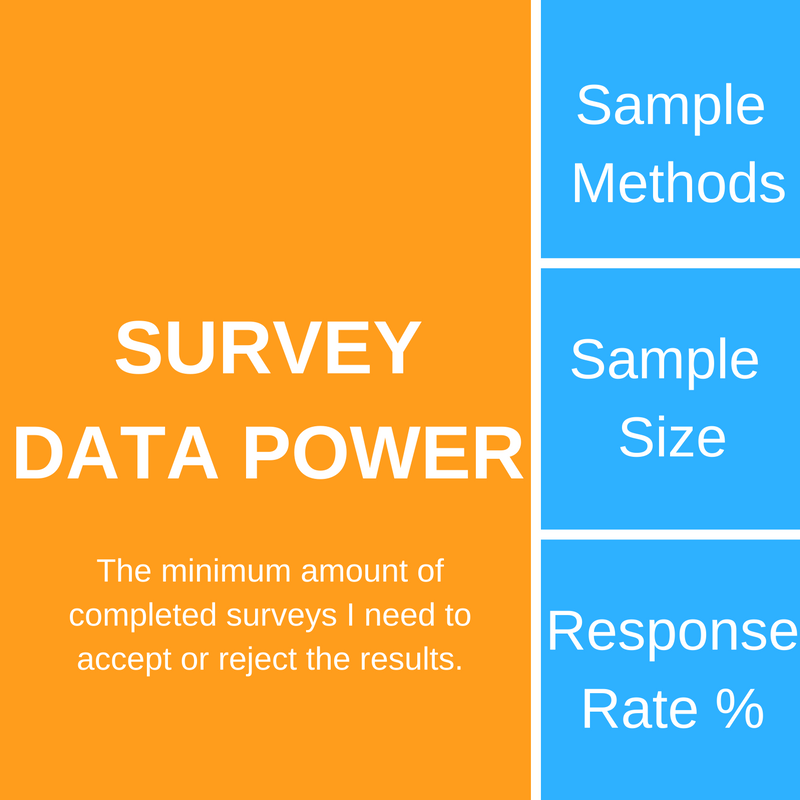

1. Sampling Method, Sample Size, and Response Rate for Email Surveys

Surveys, like all statistical methods, are bound by certain rules that need to be met before the results can be declared valid. The top three rules for surveys involve sampling method, sample size, and response rate. Only when all of these rules are met can you move on to analyzing and discussing the results. Sampling, sample size, and response rate form the basis of something called statistical power. Power refers to how likely you are to detect a significant difference between what you expected the survey answers to be and what they actually are. Larger sample sizes usually lead to more responses.

The more responses you get, the greater the power of your results.

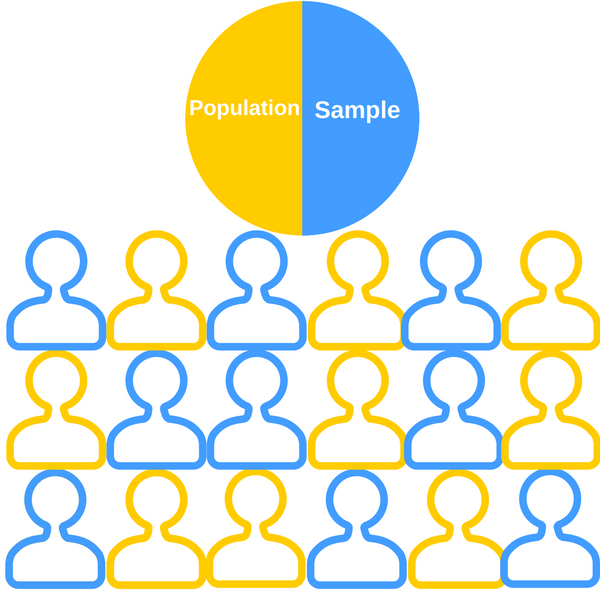

But for the responses to be valid, they need to come from a true representation of your subscribers who interacted with the campaign. The best form of sampling is called simple random sampling (SRS). In SRS, subscriber A gets the same chance to take the survey as subscriber B, and subscriber Z gets the same chance to take the survey as subscriber B. All of your subscribers who receive a certain email marketing campaign are called your population.

The image below represents 18 subscribers who all have the same chance to be a part of a survey meant to be distributed to 50% of the population.

9 subscribers were chosen randomly. However, all subscribers had the same opportunity to be chosen.
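The 18-subscriber example above can be sketched in a few lines of Python. This is a minimal illustration, not a feature of any particular email platform: the function name `simple_random_sample`, the subscriber IDs, and the fixed seed are all assumptions made for the example.

```python
import random


def simple_random_sample(subscribers, fraction, seed=None):
    """Return a simple random sample containing `fraction` of the subscribers."""
    rng = random.Random(seed)  # a seeded generator makes the draw reproducible
    sample_size = round(len(subscribers) * fraction)
    # sample() draws without replacement, so every subscriber has the same
    # chance of being chosen and nobody is chosen twice.
    return rng.sample(subscribers, sample_size)


# Hypothetical population of 18 subscribers, surveyed at 50%.
population = [f"subscriber_{i:02d}" for i in range(1, 19)]
chosen = simple_random_sample(population, fraction=0.5, seed=42)
print(len(chosen))  # 9
```

Leaving `seed` unset gives a different draw each time; fixing it is only useful when you want to document exactly who was invited.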

Sampling in the context of an email marketing campaign is pretty easy: towards the end of the campaign, ask all of your customers to fill out a survey. Everyone who received the campaign emails will have a chance to take the survey, increasing the likelihood of a higher response rate. This method is simple and effective, but it may not always be optimal for these reasons:

- Too Much Data to Analyze: Sampling is based on the premise that a random percentage of your subscribers will give similar answers to the same survey as your whole population would. If you have many subscribers and receive a high response rate, you'll spend a lot of time analyzing survey data when a fraction of the responses would give you the same results.

- Segmented Intelligence: Asking all subscribers to take a survey at the end of a campaign is a great way to get direct feedback. However, there is a strong possibility that those who answer at the end of a campaign will give different responses than those who dropped out halfway through. Sampling gives you the opportunity to segment your subscribers by different levels of campaign interaction. This is known as stratified sampling (sometimes loosely called cluster sampling): sampling your population based on certain behaviors or characteristics.

-

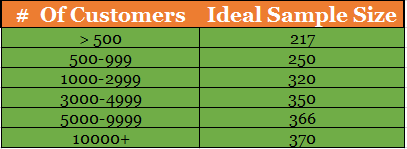

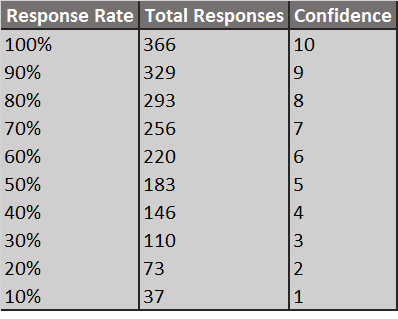

No Additional Information Gained: This sample size chart says it all.

If you have 5,000 subscribers as part of a campaign, you only need 366 randomly sampled responses to give you valid customer intelligence.

However, you need to plan for low response rates. You may only need 366 responses for 5,000 customers, but if your response rate is only 20%, you need to include more subscribers in your sample. A good rule of thumb for most email marketing campaign scenarios is to sample double the number of survey respondents you think you'll need.
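To make the arithmetic concrete, here is a rough sketch of both calculations. The article's chart does not state its assumptions, so `required_sample_size` uses a commonly cited formula (Cochran's, with a finite population correction) at an assumed 95% confidence level and 5% margin of error; it lands in the same range as the chart's 366 but not on the same number. Both function names and all default values are assumptions for illustration.

```python
import math


def required_sample_size(population, z=1.96, margin=0.05, proportion=0.5):
    """Estimate completed responses needed (Cochran's formula with a
    finite population correction). Defaults assume 95% confidence and a
    5% margin of error; the article's chart may use other settings."""
    n0 = (z ** 2) * proportion * (1 - proportion) / margin ** 2
    return math.ceil(n0 / (1 + (n0 - 1) / population))


def invitations_needed(responses_needed, expected_response_rate):
    """Number of subscribers to invite to expect `responses_needed` replies."""
    if not 0 < expected_response_rate <= 1:
        raise ValueError("expected_response_rate must be in (0, 1]")
    # Round before ceil so floating-point noise cannot add a spurious invite.
    return math.ceil(round(responses_needed / expected_response_rate, 9))


print(required_sample_size(5000))     # 357
print(invitations_needed(366, 0.20))  # 1830
```

At a 20% response rate, doubling the sample to 732 invitations would be expected to return only about 146 responses, so when you expect a low rate it is safer to divide the responses you need by that rate.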

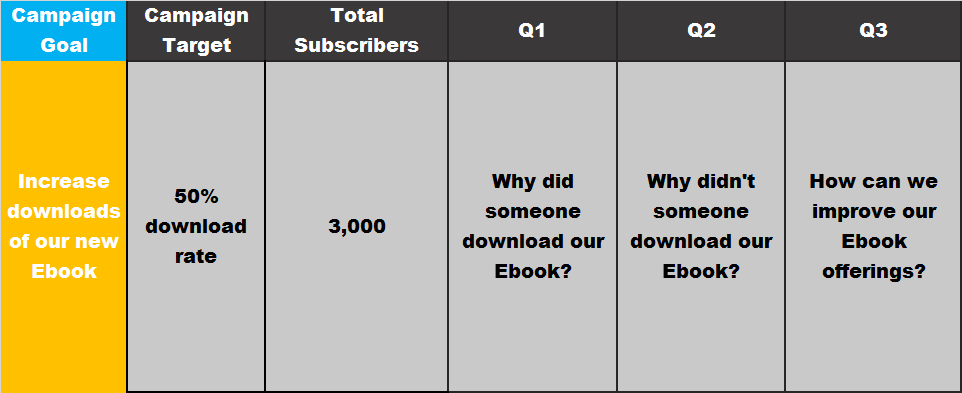

2. Developing a Targeted Hypothesis

A survey needs to have direction and be limited in scope. A precise hypothesis eliminates what you want to ask and shifts the focus to what you should ask.

A hypothesis is based on the goals of the campaign. If the goal of your email marketing campaign is to launch a new product or service offering, you'll probably want to know whether people bought the new product or service, and why or why not. But if the goal of the campaign is to increase downloads of a coupon, you may want answers to completely different questions.

The first step in developing a targeted hypothesis is defining the campaign, the campaign goals, the total number of subscribers the campaign will reach, and some questions you'd like subscribers to answer at some point (usually the end) of the campaign.

Next, you'll want to set up some rules for how you will evaluate the data. These rules set the guidelines for how you can apply customer intelligence.

First, declare a minimum required response rate. Going back to our sampling rules, an email sent to 5,000 subscribers needs 366 completed responses. However, getting a 100% response rate is highly unlikely. A minimum required response rate sets cutoff points and confidence metrics for different levels of response rates.

In this scenario, if half of the sampled audience sends back a survey, you can't be fully confident (a "5") that the results are a true representation of your subscribers. A general cutoff point is usually a response rate between 50% and 70%. For anything lower, it's probably best to either scrap the survey or re-sample 366 random subscribers.

With these parameters set, we can finally develop a targeted hypothesis. If you were to ask the survey question, "Please rate our Ebook on a scale of 1-5," your hypothesis could be:

Hypothesis: "I believe that, at a response rate between 70% and 100%, 50% of respondents will rate our Ebook a '3' or higher."

This can seem like a lot of groundwork for such a simple step. But nothing is worse for a campaign than bad data. It's not uncommon to see a 20% response rate where 66 out of 73 respondents rank the Ebook a "4." It feels good to see the number 4 until you look at the chart and realize that, with only a 20% response rate, your confidence in the accuracy of the results is only a "2." And "2" is much closer to "0" than to "5."
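One way to keep yourself honest is to write the hypothesis down as a check that refuses to give a verdict when the response rate is too low. The sketch below is an assumption-laden illustration: the function name `evaluate_hypothesis`, its parameters, and the ratings given to the seven respondents who did not answer "4" are all hypothetical.

```python
def evaluate_hypothesis(ratings, invited, min_response_rate=0.70,
                        min_rating=3, expected_share=0.50):
    """Check the hypothesis 'at least `expected_share` of respondents rate
    `min_rating` or higher', but only if enough people responded."""
    if invited <= 0:
        raise ValueError("invited must be positive")
    response_rate = len(ratings) / invited
    if response_rate < min_response_rate:
        # Too few responses: do not interpret the ratings at all.
        return {"response_rate": response_rate,
                "verdict": "insufficient responses"}
    share = sum(r >= min_rating for r in ratings) / len(ratings)
    verdict = "supported" if share >= expected_share else "not supported"
    return {"response_rate": response_rate, "share": share, "verdict": verdict}


# The scenario from the text: 73 of 366 sampled subscribers respond and
# 66 of them answer "4". The other 7 ratings are made up for the example.
ratings = [4] * 66 + [2] * 7
result = evaluate_hypothesis(ratings, invited=366)
print(result["verdict"])  # insufficient responses
```

Even though most of the ratings look good, the check stops at the response rate (roughly 20%) and never reports a share, which mirrors the reasoning above.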

3. Survey Design

Email surveys need to be enticing. In practice, designing them is no different from crafting open-worthy subject lines or taking the time to create clickable design and content layouts. There are a few options at your creative disposal.

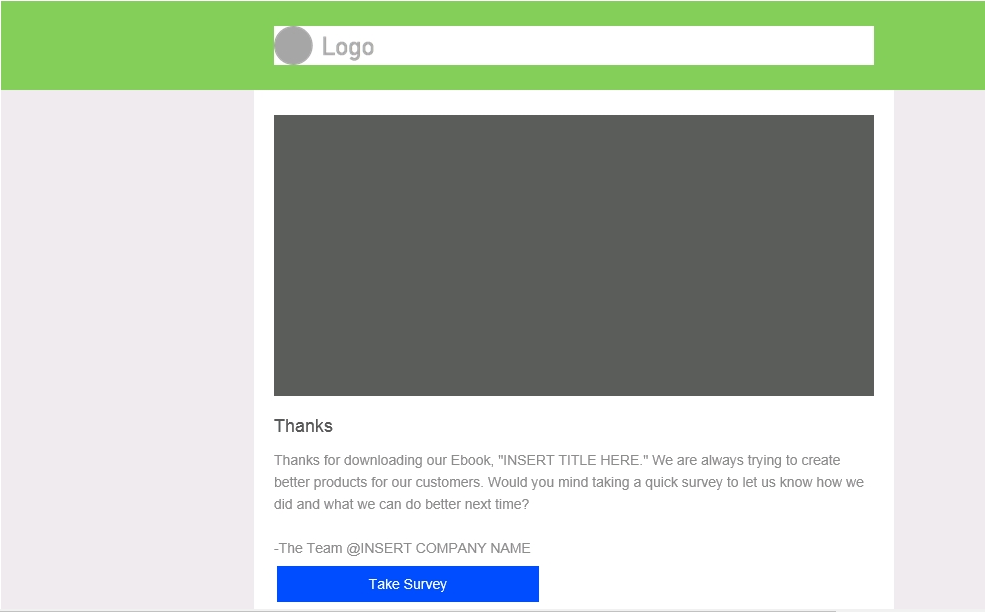

The first is a "survey only" email. Just like the name says, this is an email you send to your subscribers that contains a message which asks them to click on a link and take a survey. This is something you can do easily in the Pinpointe Drag-and-Drop Builder, just like I did below:

Because surveys take a considerable amount of time to write, design, and analyze, they deserve their own class of dedicated emails. The other option is to ask subscribers to take a survey as part of another email.

This is not the best approach to obtaining a high response rate. There are too many other content blocks to interact with before subscribers are asked to take a survey. Should you ever want to A/B test your survey design structure, the additional email elements make valid test results difficult to obtain.

The final option, sometimes used for long-term studies, is to always give subscribers the chance to take a survey. Each email you send contains a link to a survey. This is useful if you want to track responses to certain questions over time. This method can be difficult to analyze because of factors like duplicate responses and changes over time that affect the survey results. It's best left to general questions that aren't tied to a product or service. For example, you would use a long-term survey if you were interested in knowing how many times per week your subscribers wanted an email from your business. You could also use a long-term option if you were curious about something completely unrelated, such as how many blogs, on average, your subscribers read each month.

The simplest and most effective way to get high response rates is to give surveys their own email, making them an important part of your email marketing campaign.

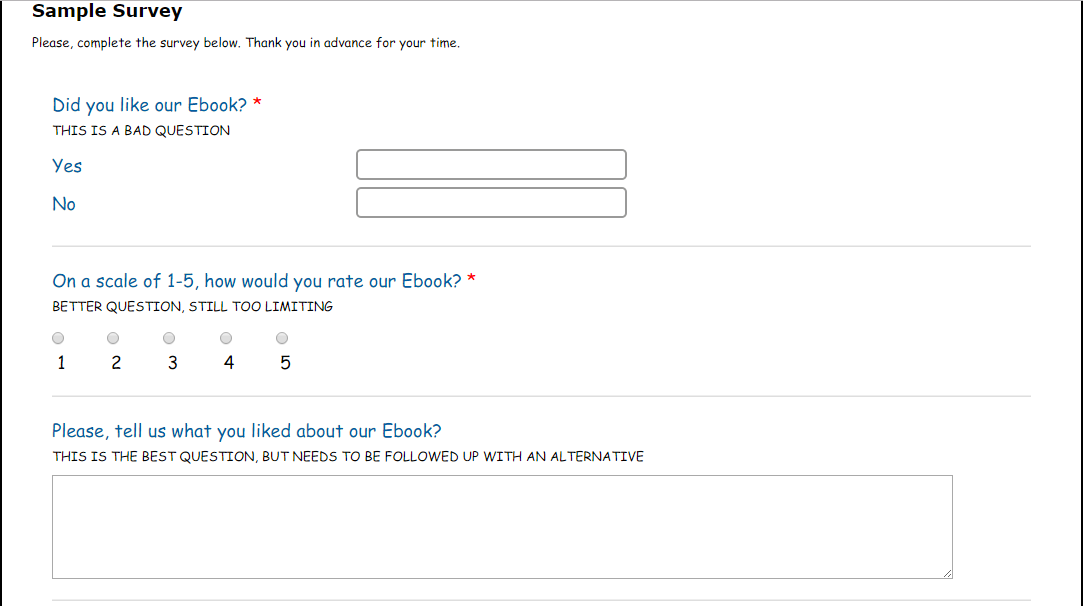

4. Writing Statistically Valid Questions

There have been entire books written on how to write statistically valid email survey questions. Most of those books, however, aren't written with marketing in mind. They also don't take into account factors such as email personalization, time available to take the survey, and practicality of the results. As a marketer, you have to walk the fine line of creating valid survey questions that also yield answers in the true tone and voice of the customer.

Here are some best practices to follow when writing your next survey:

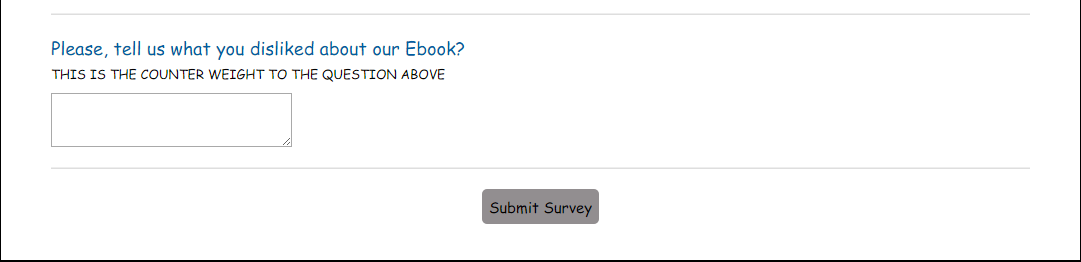

- No Leading Questions, Ever: A leading question leads a customer to a certain answer. A leading question could be, "In your opinion, how good was our Ebook?" A better variation of the question is, "Please list anything you particularly liked about our Ebook." However, that question would still be leading if you didn't follow up with, "Please list anything you disliked about our Ebook." Leading questions can quickly build upon themselves without careful balancing.

- One Answer to One Question: Surveys commonly ask subscribers to give one answer to two or more questions at once. In the case of the Ebook download, a double-loaded question may be, "On a scale of 1-10, please rate the design and content of our Ebook." This question should be split into at least two questions: "On a scale of 1-10, please rate the overall content of our Ebook" and "On a scale of 1-10, please rate the overall design of our Ebook."

- Avoid Limiting Questions to Avoid Limiting Answers: Avoid "Yes or No" questions such as, "Please answer Yes or No: Did you like our Ebook?" These questions force customers to answer a complex question with a simple response. It's very rare that simple responses yield the best intelligence. Good survey questions do their best to capture a wide range of customer responses. Though they take longer to analyze, open responses are the best for complex questions.

- Give Rating Scales Categories: Asking people to rate items on a scale of 1-10 naturally biases questions. Whose scale of 1-10? The writer of the survey has a much different idea of what a "5" is than those who will take the survey. However, these questions are usually necessary to ask, as they allow for exact response comparison within hypothesis expectations. To clarify what the numbers represent, it's best to assign each number a category. For example, a "1" could be coded as "poor" and a "10" coded as "perfect / no issues."

- Offer Rating Bins: When possible, create bins for questions. If the question is, "How many webinars did you attend in the past month," you could leave the question open ended (precise, but difficult to analyze) or create 5 bins: "0", "1-5", "6-10", "11-15", and "16 or more." Bins offer the flexibility to easily compare loaded, but well explained, data points. They are especially useful when a range of responses is acceptable.

- Personalization: The language of your survey questions needs to be as natural as possible so a wide range of subscribers understand what you mean. Perhaps you're interested in finding out what device most of your subscribers are using to read your Ebook. Asking a question like:

  "On what device and client did you mostly read the Ebook on? Please select from the choices below" isn't natural. But:

  "Please select from the choices below the device on which you're most likely to read Ebooks" is a bit clearer.

  If the survey is difficult to understand, or doesn't speak in a natural, conversational tone, completion rates tend to plummet.

- Be Direct: If you ask the question, "What did you think about the design of our Ebook," be prepared for a variety of responses. Some will concentrate only on the cover of the Ebook, while others will offer commentary on the layout of the content. Some may just respond to the color palette choice. The less direct and precise a question, the more variance you'll get in your answers. A better question would be an open response item that asks, "What did you think about the design of our Ebook cover?" Yes, you will still have some variance in the answers, but at least the answers only involve a single item.

- Avoid Too Many Questions: Going back to the hypothesis stage, a survey should have a strict purpose. If you are designing a product review survey, you'll need to decide whether the purpose of the survey is to get feedback on how a customer liked or disliked the product or to gauge the user experience. Though these may seem like similar surveys, the two paths provide different sets of intelligence. Because customer time and willingness to complete surveys are limited, the survey that delivers the best outcome for the next stage of your business is the best choice.
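If you do collect open-ended counts, the webinar bins from the "Offer Rating Bins" practice above can also be applied afterwards, during analysis. The following is a minimal sketch; the function name `webinar_bin` and the sample answers are hypothetical.

```python
from collections import Counter


def webinar_bin(count):
    """Map a raw webinar count onto the five bins used in the example."""
    if count < 0:
        raise ValueError("count cannot be negative")
    if count == 0:
        return "0"
    if count <= 5:
        return "1-5"
    if count <= 10:
        return "6-10"
    if count <= 15:
        return "11-15"
    return "16 or more"


# Hypothetical open-ended answers from seven respondents.
answers = [0, 3, 5, 6, 12, 20, 1]
tally = Counter(webinar_bin(a) for a in answers)
print(tally["1-5"])  # 3
```

Binning after the fact keeps the precise answers available while still giving you a small, comparable set of categories.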

Best Practices Equal Best Results

For how much intelligence surveys offer, they are generally underused by marketers. Valid surveys take a lot of the guesswork out of analyzing how customers received your prior campaign and what they want in the future. Surveys can provide customer feedback, validate concepts, and offer insight into what you should be doing next. But the most important reason to include surveys as part of your email marketing campaign is that the results are sure to challenge your existing assumptions.
